Introduction

In the ever-evolving domain of IT infrastructure, server profiling plays a critical role in maintaining performance, security, and scalability. Server profiling is the process of analyzing the behavior, permissions, and operations of a server to ensure that each application deployed on it behaves as expected, remains secure, and adheres to organizational standards. For professionals and learners navigating this domain, especially those preparing for certification paths such as those offered by DumpsArena, understanding the fundamentals of what defines application behavior on servers is essential. A recurring question in this context is: “In profiling a server, what defines what an application is allowed to do or run on a server?”

This question isn’t just theoretical; it lies at the heart of server administration, cybersecurity, and compliance management. To understand the depth of this concept, one must explore the interplay between operating systems, access control lists, policy enforcements, application permissions, and security frameworks that dictate how and what an application can execute on a server.

This article from DumpsArena breaks down this critical concept in an exhaustive yet approachable format—tailored for IT professionals, students, and enthusiasts alike—by elaborating on the fundamental elements that define application behavior within the realm of server profiling.

The Core of Server Profiling: Understanding the Concept

Server profiling encompasses the detailed inspection and monitoring of software components, configurations, resource allocations, and executable permissions on a server. It provides a lens into how server applications interact with the underlying operating system and external entities.

Profiling helps administrators answer vital questions like:

- What is this application doing?
- Is it consuming more resources than expected?
- Is it communicating with authorized systems only?
- What files and libraries is it accessing?
- Is it exhibiting behaviors consistent with its expected functionality?

At its core, server profiling isn’t just about performance; it’s a security gatekeeper. It allows IT teams to detect anomalies, isolate malicious behavior, and enforce a strict operational scope. For example, if an application designed to serve web content suddenly attempts to write system-level files or execute shell commands, that’s a red flag.

What an application is allowed to do is therefore defined by several layered components: configuration files, user permissions, security modules, and sandboxing policies.

The Role of Application Permissions and Access Control Mechanisms

One of the most foundational pillars that define application behavior is permissions. These permissions, configured at both the system and application levels, dictate what actions an application can undertake—whether it can read files, execute scripts, access network resources, or modify configurations.

At the operating system level, Access Control Lists (ACLs) and Discretionary Access Control (DAC) models govern these permissions. These systems determine:

- Which user or process owns the application
- What groups the application belongs to
- What permissions are granted (read, write, execute)

In UNIX-like systems, for instance, applications inherit the permissions of the user under which they run. A web server running as www-data may not be able to access system files owned by root, unless explicitly allowed. Thus, the identity and associated privileges of the application process form a fundamental rulebook for what it can and cannot do.
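The owner/group/other check described above can be sketched in a few lines of Python. This is a simplified model, not the kernel's implementation: it ignores root, capabilities, and POSIX ACLs, and the numeric IDs (33 for www-data, 0 for root) are illustrative assumptions that vary between systems.

```python
import stat

# Permission bits for each class, keyed by the requested access type.
_BITS = {
    "owner": {"r": stat.S_IRUSR, "w": stat.S_IWUSR, "x": stat.S_IXUSR},
    "group": {"r": stat.S_IRGRP, "w": stat.S_IWGRP, "x": stat.S_IXGRP},
    "other": {"r": stat.S_IROTH, "w": stat.S_IWOTH, "x": stat.S_IXOTH},
}


def dac_allows(mode, file_uid, file_gid, proc_uid, proc_gids, want):
    """Classic UNIX mode-bit check.

    Only the first matching class (owner, then group, then other) is
    consulted. `want` is a string drawn from "r", "w", "x".
    """
    if proc_uid == file_uid:
        cls = "owner"
    elif file_gid in proc_gids:
        cls = "group"
    else:
        cls = "other"
    return all(mode & _BITS[cls][perm] for perm in want)


# Illustrative IDs: a process running as www-data (uid 33, gid 33)
# against a file owned by root (uid 0, gid 0).
print(dac_allows(0o640, 0, 0, 33, {33}, "r"))  # False: "other" has no bits
print(dac_allows(0o644, 0, 0, 33, {33}, "r"))  # True: "other" may read
```

On a real system, the same inputs come from `os.stat()` for the file and from the process credentials, which is why changing the account an application runs under changes what it can touch.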

Beyond DAC, more advanced mechanisms like Mandatory Access Control (MAC) introduce stricter policies. Technologies such as SELinux (Security-Enhanced Linux) and AppArmor in Linux environments implement MAC to restrict applications based on centrally defined policies (type labels in SELinux, per-program path profiles in AppArmor). These policies are central in defining what behavior is permissible, even when traditional DAC permissions would allow the action. If an enforcing SELinux policy forbids a web server from accessing certain directories, that restriction holds irrespective of file-level permissions.
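To illustrate how a MAC layer sits on top of DAC, here is a toy type-enforcement model in Python. The type names follow common SELinux naming conventions, but the rule table, the function names, and the evaluation logic are assumptions made for illustration; a real SELinux policy is compiled and enforced by the kernel.

```python
# Toy policy: (source_type, target_type, object_class) -> permitted operations.
ALLOW_RULES = {
    ("httpd_t", "httpd_sys_content_t", "file"): {"read", "getattr", "open"},
    ("httpd_t", "httpd_log_t", "file"): {"append", "getattr", "open"},
}


def mac_allows(source_type, target_type, object_class, perm):
    """Default deny: anything without an explicit allow rule is refused."""
    allowed = ALLOW_RULES.get((source_type, target_type, object_class), set())
    return perm in allowed


def access_permitted(dac_ok, source_type, target_type, object_class, perm):
    """Both layers must agree; generous file modes cannot override MAC."""
    return dac_ok and mac_allows(source_type, target_type, object_class, perm)


# DAC says yes (dac_ok=True), but no rule lets httpd_t read shadow_t files.
print(access_permitted(True, "httpd_t", "shadow_t", "file", "read"))  # False
print(access_permitted(True, "httpd_t", "httpd_sys_content_t", "file", "read"))  # True
```

The design point is the default-deny lookup: the application's owner cannot grant itself access, because the rule table is controlled by the administrator rather than by file ownership.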

Application Sandboxing and Isolation Techniques

In modern server environments, especially cloud-based and containerized infrastructures, sandboxing plays a vital role in restricting application behavior. Sandboxing confines an application to a controlled environment where its interaction with the system is limited.

Containers (e.g., Docker), virtual machines, and microVMs like Firecracker allow applications to run in isolated spaces. This approach defines:

- Which system calls an application can make
- What network ports it can access
- Whether it can interact with hardware devices
- What host resources (CPU, memory, disk) it can consume

Linux's seccomp (secure computing mode) facility provides fine-grained control over which system calls an application may make. An administrator can explicitly block the calls behind file deletion (such as unlink), socket creation (socket), or process spawning (such as fork or execve) for an application, thus defining its behavioral boundaries.
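The allowlist idea behind a seccomp filter can be modelled in plain Python. This sketch only simulates the decision logic; it does not install a real filter (real filters are BPF programs loaded into the kernel), and the class name, action strings, and chosen syscalls are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SyscallFilter:
    """Toy model of a syscall allowlist with a default action."""

    default_action: str = "ERRNO"  # applied to any syscall not listed
    rules: dict = field(default_factory=dict)

    def allow(self, *names):
        """Mark the named syscalls as permitted; returns self for chaining."""
        for name in names:
            self.rules[name] = "ALLOW"
        return self

    def evaluate(self, syscall):
        """Return the action the filter would take for this syscall."""
        return self.rules.get(syscall, self.default_action)


# A hypothetical filter for a process that only serves existing connections.
web_filter = SyscallFilter().allow("read", "write", "accept4", "close")

print(web_filter.evaluate("read"))    # ALLOW
print(web_filter.evaluate("unlink"))  # ERRNO: file deletion is not listed
print(web_filter.evaluate("execve"))  # ERRNO: spawning programs is not listed
```

Starting from a deny-by-default filter and adding only the calls the application needs is usually easier to reason about than trying to enumerate every dangerous call.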

This level of isolation is especially important in multi-tenant systems where different applications—often from different clients—run on the same server infrastructure. By sandboxing applications, profiling tools and system policies ensure that even if an application is compromised, its ability to impact the broader system is minimized.

Security Policies and Enforcement Modules

Security policies function like contracts between the system and applications. These policies are defined by system administrators or security teams and are enforced using kernel-level or middleware-level modules.

For example:

- SELinux uses a policy language to specify allowed interactions between processes, files, and ports.
- AppArmor uses profile-based rules to bind programs to their expected behaviors.
- Windows Server environments utilize Group Policy Objects (GPOs) to enforce user and application restrictions at the domain level.

These policies may define:

- Which files or directories an application can access
- Whether it can initiate outbound network connections
- If it can fork child processes
- Whether it can execute certain binary types

The beauty of such systems lies in their granularity. A policy can state that an application may read from /var/log/myapp/ but not write to it. Or that a script may run only if it passes a checksum validation. These rules create a clear definition of what is “allowed” versus what is “denied,” thus giving administrators a blueprint for profiling and securing applications on servers.
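The read-but-not-write rule for /var/log/myapp/ can be expressed as a small path-based profile check. This is a minimal sketch in the spirit of a profile-based system, not AppArmor's actual rule syntax or matching algorithm; the second path (/var/lib/myapp/) and all function names are hypothetical.

```python
import posixpath

# Each entry: (directory prefix, set of permitted operations).
PROFILE = [
    ("/var/log/myapp/", {"r"}),
    ("/var/lib/myapp/", {"r", "w"}),  # hypothetical data directory
]


def profile_allows(path, perm, profile=PROFILE):
    """Return True only if a profile entry covers `path` and grants `perm`."""
    # Normalize first so "../" sequences cannot escape an allowed directory.
    normalized = posixpath.normpath(path)
    for prefix, perms in profile:
        root = prefix.rstrip("/")
        if normalized == root or normalized.startswith(root + "/"):
            return perm in perms
    return False  # default deny for anything the profile does not mention


print(profile_allows("/var/log/myapp/app.log", "r"))  # True
print(profile_allows("/var/log/myapp/app.log", "w"))  # False
print(profile_allows("/var/log/myapp/../../../etc/passwd", "r"))  # False
```

Normalizing the path before matching matters: without it, a request that starts inside an allowed directory and climbs out with `..` would pass a naive prefix check.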

Profiling with Behavior Monitoring and Anomaly Detection

Another essential tool in defining what an application is allowed to do comes from real-time behavior monitoring. Profiling isn't just about setting rules—it’s also about observing execution.

Tools like:

- Auditd (Linux audit daemon)
- Sysmon (Windows System Monitor)
- OSSEC, Tripwire, and Falco

... allow administrators to capture:

- System calls made by applications
- Files accessed or modified
- Network destinations contacted
- Processes spawned and their execution hierarchy

Over time, the events these tools record can be aggregated into a behavior profile for each application. This profile helps define the "normal" activity range. If an application attempts an operation outside its historical behavior, such as writing to a new directory or executing shell commands, the monitoring stack can flag this as an anomaly. In advanced security setups, such deviations can trigger automated responses like terminating the process or alerting the security operations center (SOC).

This adaptive profiling goes beyond static policy enforcement. It can use statistical baselines or machine learning to continuously refine the understanding of what the application is allowed, or expected, to do.
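The simplest form of such a baseline is a set of previously seen behaviors, with anything new flagged for review. The sketch below assumes a hypothetical event format (dictionaries with `app`, `action`, and `target` keys); real tools emit richer records, and statistical or machine-learning baselines would replace the plain set lookup.

```python
def event_key(event):
    """Reduce an event to the fields that define 'the same behavior'."""
    return (event["app"], event["action"], event["target"])


def build_baseline(events):
    """Collect every distinct behavior seen during the learning period."""
    return {event_key(e) for e in events}


def find_anomalies(baseline, new_events):
    """Return events whose behavior never appeared in the baseline."""
    return [e for e in new_events if event_key(e) not in baseline]


# Hypothetical learning-period events for a web application.
history = [
    {"app": "webapp", "action": "read", "target": "/var/www/index.html"},
    {"app": "webapp", "action": "connect", "target": "db.internal:5432"},
]
baseline = build_baseline(history)

observed = [
    {"app": "webapp", "action": "read", "target": "/var/www/index.html"},
    {"app": "webapp", "action": "exec", "target": "/bin/sh"},
]
for anomaly in find_anomalies(baseline, observed):
    print("anomaly:", anomaly["action"], anomaly["target"])
```

An exact-match baseline like this is noisy for targets that legitimately vary (temporary files, rotating log names), which is one reason production systems generalize paths or use statistical thresholds instead.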

Containerization and Role-Based Access Control (RBAC)

With the rise of container orchestration platforms like Kubernetes, defining application behavior has expanded into new paradigms. Kubernetes and similar systems use Role-Based Access Control (RBAC) to define permissions for applications (running as pods or services) in a cluster.

RBAC policies specify:

- What actions (verbs) an application can take (e.g., get, list, create)
- On which resources (e.g., secrets, configmaps, pods)
- Within which namespace or context

This modular control mechanism helps isolate workloads and prevent privilege escalation. Combined with Pod Security Admission (the successor to PodSecurityPolicies, which were removed in Kubernetes 1.25), NetworkPolicies, and admission controllers, Kubernetes offers a sophisticated system to define and enforce what applications can do within the cluster.
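The verb/resource/namespace structure of an RBAC rule can be modelled in Python as follows. This is a toy evaluator, not the Kubernetes API: the `Rule` class, the namespace names, and the function are assumptions for illustration. It does mirror one real property of Kubernetes RBAC, namely that rules are purely additive and there is no deny rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One grant: these verbs on these resources within one namespace."""

    namespace: str
    resources: frozenset
    verbs: frozenset


def rbac_allows(rules, namespace, resource, verb):
    """A request is allowed if any rule grants it; otherwise it is denied."""
    return any(
        rule.namespace == namespace
        and resource in rule.resources
        and verb in rule.verbs
        for rule in rules
    )


# Hypothetical rules bound to an application's service account.
app_rules = [
    Rule("payments", frozenset({"configmaps"}), frozenset({"get", "list"})),
    Rule("payments", frozenset({"pods"}), frozenset({"get"})),
]

print(rbac_allows(app_rules, "payments", "configmaps", "get"))  # True
print(rbac_allows(app_rules, "payments", "secrets", "get"))     # False
print(rbac_allows(app_rules, "default", "configmaps", "get"))   # False
```

Because nothing is allowed unless a rule grants it, profiling a workload's permissions amounts to listing the rules bound to its identity.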

Applications running inside a Kubernetes pod, for example, can be configured to only:

- Access specific ConfigMaps
- Mount approved volumes
- Communicate over allowed service meshes
- Perform certain API calls

Thus, profiling within containerized ecosystems means reading the cluster's declarative configuration (roles, bindings, network policies, and volume mounts) as the effective permission set of each workload.

Code Integrity and Whitelisting Mechanisms

Another major aspect of defining what an application can run or execute on a server is code integrity verification. This process ensures that only approved, signed, or trusted applications are allowed to run.

Operating systems and endpoint protection solutions implement this through:

- Application whitelisting
- Code signing
- Digital certificate verification

In Microsoft environments, for example, Windows Defender Application Control (WDAC) enforces application execution policies by verifying signatures. Similarly, the Linux Integrity Measurement Architecture (IMA), when its appraisal mode is enforced, can block the execution of binaries that have been modified or are unsigned.

These mechanisms help ensure that applications can only perform operations that are associated with known, trusted codebases. They prevent unauthorized scripts, backdoors, or malware from running—thus solidifying the definition of “what an application is allowed to do.”
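A hash-based allowlist is the simplest version of this idea: compute a digest of the code and run it only if the digest is on an approved list. The sketch below uses SHA-256 from Python's standard library; the function names and the sample payloads are hypothetical, and production systems such as WDAC or IMA rely on signatures and kernel enforcement rather than a user-space check like this.

```python
import hashlib
import hmac


def digest(data):
    """Return the SHA-256 hex digest of a bytes object."""
    return hashlib.sha256(data).hexdigest()


def digest_file(path, chunk_size=65536):
    """Hash a file in chunks so large binaries need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def is_allowed(data, allowlist):
    """True if the content's digest matches any approved digest."""
    actual = digest(data)
    return any(hmac.compare_digest(actual, known) for known in allowlist)


# Hypothetical approved build; in practice the digests would come from a
# signed manifest produced by the release process.
approved = {digest(b"trusted build v1")}

print(is_allowed(b"trusted build v1", approved))           # True
print(is_allowed(b"trusted build v1 + backdoor", approved))  # False
```

Any change to the content, even a single byte, produces a different digest, so a modified binary fails the check without the allowlist needing to know anything about the modification.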

Configuration Management and Deployment Policies

Defining application behavior also relies heavily on how the software is deployed and configured. Configuration management tools like:

- Ansible
- Puppet
- Chef
- SaltStack

... help enforce declarative states. For instance, you can define that a server should always run a specific version of a package, with certain configurations, and under specified user permissions.

These tools ensure consistency in deployments and allow for server profiles to be version-controlled and auditable. If an application deviates from its expected deployment profile, the configuration management tool can automatically revert it—thereby limiting the scope of unauthorized changes.
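The core of declarative enforcement is a comparison between desired state and observed state. The following sketch shows that comparison only; the state keys and values are hypothetical, and real tools such as Ansible or Puppet gather facts from the host and apply the corrections themselves.

```python
def find_drift(desired, actual):
    """Return {key: (desired_value, actual_value)} for every mismatch.

    Keys present only in `actual` are ignored: the profile constrains
    what it declares and says nothing about the rest.
    """
    drift = {}
    for key, want in desired.items():
        have = actual.get(key)
        if have != want:
            drift[key] = (want, have)
    return drift


# Hypothetical server profile kept under version control.
desired_state = {
    "nginx.version": "1.24.0",
    "nginx.run_as": "www-data",
    "nginx.config_mode": 0o644,
}

# Hypothetical facts collected from a live server.
observed_state = {
    "nginx.version": "1.24.0",
    "nginx.run_as": "root",
    "nginx.config_mode": 0o644,
    "uptime_days": 12,
}

for key, (want, have) in find_drift(desired_state, observed_state).items():
    print(f"drift in {key}: expected {want!r}, found {have!r}")
```

An empty result means the server matches its profile; a non-empty one is both an audit finding and the list of changes a remediation run would need to make.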

Integrating Server Profiling into CI/CD Pipelines

In DevOps practices, server profiling and permission definitions have shifted left—into the development and deployment pipelines. During CI/CD execution, automated scripts perform static and dynamic analysis to check for:

- Over-permissive configurations
- Use of unauthorized libraries
- Violation of compliance policies
- Excessive network or filesystem access

Security testing tools (SAST, DAST, IAST) flag issues during build or test stages. As a result, before an application even reaches a production server, its capabilities are profiled and refined based on established policy frameworks.

Thus, CI/CD pipelines act as the first layer of defining application capabilities, preventing misconfigured or insecure software from being deployed to live servers.
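A pipeline gate for over-permissive configurations might look like the sketch below. The manifest schema (`run_as_user`, `file_modes`, `egress_cidrs`) is invented for this example and does not correspond to any particular tool; the three checks are only a sample of what a real policy would cover.

```python
def audit_manifest(manifest):
    """Return a list of findings; an empty list means the gate passes."""
    findings = []

    # Running as root removes most DAC protections.
    if manifest.get("run_as_user") in (0, "root"):
        findings.append("application is configured to run as root")

    # The 0o002 bit grants write access to every user on the system.
    for path, mode in sorted(manifest.get("file_modes", {}).items()):
        if mode & 0o002:
            findings.append(f"world-writable path: {path}")

    # 0.0.0.0/0 means any destination on the network.
    if "0.0.0.0/0" in manifest.get("egress_cidrs", []):
        findings.append("unrestricted outbound network access")

    return findings


# Hypothetical deployment manifest checked during the build stage.
candidate = {
    "run_as_user": "root",
    "file_modes": {"/etc/myapp.conf": 0o666, "/opt/myapp/bin": 0o755},
    "egress_cidrs": ["0.0.0.0/0"],
}

for finding in audit_manifest(candidate):
    print("FAIL:", finding)
```

In a pipeline, a non-empty findings list would typically fail the build, so the over-permissive configuration never reaches a production server.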

Conclusion

In profiling a server, what an application is allowed to do or run is not defined by a single setting. It is the combination of several interdependent systems and policies: operating system-level permissions, sandboxing, mandatory access control modules, behavioral monitoring, code integrity checks, and configuration management tools. Each layer plays a unique role in building a secure, predictable, and enforceable execution environment.

For professionals studying through DumpsArena and preparing for certifications that require mastery of system administration and cybersecurity concepts, this understanding is vital. It is not enough to deploy or manage an application; one must know how to profile, define, and enforce its behavior to safeguard the server and the broader network ecosystem.

By mastering these principles, IT professionals ensure that applications behave as intended—and nothing more—preserving both the security and integrity of the systems they manage.

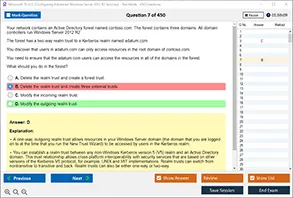

Which of the following best describes Mandatory Access Control (MAC) in server environments?

A. A model where users set permissions for their own resources

B. A flexible access system based on user roles

C. A strict policy-enforcement model that restricts access based on predefined rules

D. A default setting for all Windows-based systems

What is the primary purpose of sandboxing an application on a server?

A. To allow unlimited system resource access

B. To improve network throughput

C. To isolate the application and limit its system interactions

D. To bypass server firewalls for debugging purposes

Which Linux security module is commonly used to enforce strict access policies for applications?

A. TCPDump

B. SELinux

C. Nmap

D. Netcat

What role does Role-Based Access Control (RBAC) play in containerized environments?

A. Encrypting data in storage volumes

B. Managing access to resources based on user and service roles

C. Monitoring server CPU usage

D. Managing firewall configurations

Which tool helps monitor real-time application behavior for profiling purposes on Windows systems?

A. Sysmon

B. Git

C. curl

D. Telnet

In a Linux environment, what does seccomp primarily restrict?

A. File permissions for users

B. System calls an application can make

C. Network access between applications

D. Boot order of the operating system

What is the function of a configuration management tool like Ansible in application profiling?

A. Encrypting user traffic

B. Enforcing consistent application states and deployments

C. Monitoring internet bandwidth

D. Automatically generating server passwords

Which method ensures only trusted applications or code can run on a server?

A. MAC address filtering

B. Static code analysis

C. Application whitelisting

D. Port scanning

What component in Kubernetes controls what a pod or application can do within the cluster?

A. Network Load Balancer

B. Helm Chart

C. Role-Based Access Control (RBAC)

D. Node Affinity

What is one of the main benefits of integrating profiling policies into CI/CD pipelines?

A. Slowing down application deployment for testing

B. Allowing developers unrestricted system access

C. Detecting security issues before applications reach production

D. Avoiding use of version control systems